I asked Gemini to create a Product Management text game

Gemini created the Kobayashi Maru of Product Management

Google’s AI Studio had a template prompt for “Build a dynamic text adventure game using Gemini and Imagen,” and I thought I’d try it out. Once I told it not to use Imagen, since that requires a paid API key, Gemini created a standard text adventure where you start out in the woods and need to explore. Kind of interesting, but I thought it would be fun to see what it came up with if I asked for a text game where you are a product manager trying to ship a new product. What Gemini created was an unwinnable Kobayashi Maru situation. That unwinnable situation says something about Product Management, or at least how it is written about, and something about how LLMs work.

For those not familiar with the Kobayashi Maru, it’s a training simulation in Star Trek designed to be unwinnable. Captain Kirk beats it by hacking the simulation. I’m pretty convinced I too would have to hack my simulator to win it.

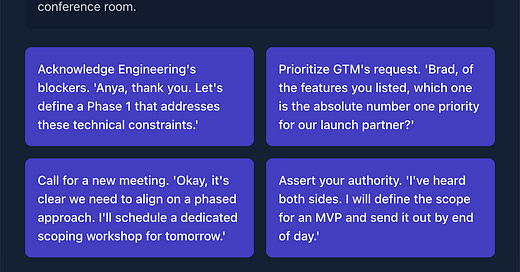

Here’s what the game looks like:

The generated app looks great.

At first, I was amazed by how compelling the game was. But as I played through, it became clear that it made mistakes. I’d be in a meeting talking to engineering, and the next response would get confused and think I was in a meeting with the GTM team. I kept playing to see if I could get to the end. I thought I was making progress, but a last-minute critical bug turned into a rerun of the same scope/time trade-off from earlier in the game, ignoring the fact that development was almost done.

It was at this point that I decided to look under the hood.

The prompts are way simpler than I originally thought

I had originally assumed the game kept the full history of every step, the way a ChatGPT conversation does. But no: the prompt that generates the next round contains only some static overall instructions, the story text generated for the current round, and the choice the user made. As a result, the LLM loses all context from earlier rounds. This lack of context is one of the reasons the game is unwinnable.
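To make that structure concrete, here is a minimal TypeScript sketch of a no-history prompt, assuming the three pieces are simply concatenated. The function and parameter names (`buildRoundPrompt`, `instructions`, `currentStory`, `playerChoice`) and the prompt wording are hypothetical, not copied from the generated app.

```typescript
// Hypothetical sketch: each round's prompt is built only from fixed
// instructions, the current round's story text, and the player's choice.
// Nothing from earlier rounds is included, so the model can't "remember" it.
function buildRoundPrompt(
  instructions: string,
  currentStory: string,
  playerChoice: string,
): string {
  return [
    instructions,
    `Current situation:\n${currentStory}`,
    `The player chose: ${playerChoice}`,
    "Describe what happens next and offer new choices.",
  ].join("\n\n");
}

// Example: this round's prompt carries no trace of any earlier round.
const prompt = buildRoundPrompt(
  "You are narrating a text game about a product manager shipping a product.",
  "Engineering says the launch date is at risk.",
  "Cut scope to hit the date",
);
console.log(prompt);
```

Because the current story and choice are the only game state passed in, anything that happened two rounds ago, such as a promise made to engineering, is simply invisible to the model.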

This situation inadvertently reveals a truth about product management: As a product manager, one good decision will not get you over the finish line. Accomplishing anything meaningful requires sustained effort. Occasionally, there will be a piece of low-hanging fruit, maybe a bug or tiny feature that you can prioritize, and it will quickly make a real impact. But your big wins require working day in and day out to make good decisions and overcome obstacles.

As far as I can tell, the no-history version of the game is unlikely to ever get to a win condition.

So I added history

Initially, I thought I would add a running history of all the text and choices and append that to the prompt. Then it occurred to me that I could use the LLM to summarize each round, which would mean fewer input tokens for the LLM to process every round than resending the full text. With Gemini 2.5 Flash, the model the app was generated to use, adding history improved the game a little: it stopped getting confused about context and had better continuity, but it still seemed like it would never reach a conclusion.
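A minimal sketch of the summarize-each-round idea follows, under a few assumptions. The class and method names are hypothetical, and the model call is passed in as a `generate` function so the sketch doesn’t depend on any particular SDK; in a real app it would wrap a Gemini request. It illustrates the approach rather than reproducing the repo’s code.

```typescript
// Hypothetical sketch: keep a short model-written summary of each finished
// round and include all summaries in the next round's prompt.

// The model call is injected so the sketch stays SDK-agnostic (assumption).
type Generate = (prompt: string) => Promise<string>;

class GameHistory {
  private summaries: string[] = [];

  // After a round is played, ask the model for a brief summary and store
  // only that, instead of the full story text, to keep later prompts small.
  async recordRound(generate: Generate, story: string, choice: string): Promise<void> {
    const summary = await generate(
      "Summarize this game round in one or two sentences.\n\n" +
        `Story:\n${story}\n\nPlayer choice: ${choice}`,
    );
    this.summaries.push(summary.trim());
  }

  // Build the next round's prompt: static instructions, summaries of all
  // earlier rounds, then the current round's story and choice.
  buildPrompt(instructions: string, currentStory: string, choice: string): string {
    const history =
      this.summaries.length > 0
        ? this.summaries.map((s, i) => `Round ${i + 1}: ${s}`).join("\n")
        : "(no earlier rounds)";
    return [
      instructions,
      `Story so far:\n${history}`,
      `Current situation:\n${currentStory}`,
      `The player chose: ${choice}`,
    ].join("\n\n");
  }
}

// Usage with a stand-in for the model so the sketch runs offline.
const fakeGenerate: Generate = async () =>
  "The PM promised sales a Q3 launch without consulting engineering.";

const game = new GameHistory();
game
  .recordRound(fakeGenerate, "Sales asks for a Q3 launch.", "Agree to Q3")
  .then(() => {
    console.log(game.buildPrompt("Narrate a PM text game.", "Engineering is upset.", "Apologize"));
  });
```

The trade-off: each round costs one extra model call to produce its summary, in exchange for a much smaller history in every later prompt.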

Things got interesting when I switched to Gemini 2.5 Pro. The text got more sophisticated, though each round took much longer to generate. The game also started to take into account that I had told it the company was in Ad Tech.

With history and the Pro model, the game got harder, and I actually managed to get myself fired, though not on purpose. In that instance of the game, engineering got mad that I said we’d figure out how to ship the product within the timeline without consulting them first, and it was all downhill from there. I don’t think it was possible to get fired in the original no-history game, because that one just went around in circles.

What does this say about Product Management?

LLMs are like a big mirror that reflects the conventional wisdom represented by the bulk of documents on the public internet. The better model with history made the game more difficult because the majority of what’s written about Product Management on the Internet is pretty negative. The game took on the valence of the myriad r/ProductManagement threads on Reddit, where product managers are caught between uncooperative engineering managers, unreasonable sales teams, and unhelpful management. Combine those threads with standard game mechanics, where play shouldn’t be easy, and you end up with an unwinnable game: a Kobayashi Maru, but without the teachable moment the real Kobayashi Maru is designed to deliver.

Internet postings on all subjects do lean negative for the simple reason that people with problems they are struggling to solve are the ones who post the most frequently. Most people who are succeeding don’t post for advice. Even so, I think this negative loop, where it’s impossible to get sales teams to accept engineering realities, is all too common. I think there are too many product managers out there who are struggling in difficult or impossible circumstances, and the LLM-generated game reflects that.

Do LLMs write good code?

The look of the game is good, and it ran out of the box, which is better than some of my previous experiences with LLM-written code. However, the original code was unplayable because it kept bombing out with this message:

At first, I thought this was happening because of the choices I made in the game, which made it confusing. When I looked into the code, I found that this cryptic message is the result of an API error caused by the Gemini service being overloaded, which happens about 10% to 20% of the time.

I fixed it by modifying the code to add a retry loop, so a single error wouldn’t end the game. Should the LLM have done a better job? Probably. Getting to a usable game took a human who knew enough about programming to identify the issue and fix it by hand. Maybe I could have instructed the LLM to fix it, but I would still have needed to know what the fix was.
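The retry idea can be sketched as a small generic helper with exponential backoff. The name `withRetry` and the default attempt count and delays are assumptions for illustration, not the exact fix in the repo; a more careful version would retry only on overload-type errors (such as an HTTP 503) and surface other errors immediately.

```typescript
// Hypothetical retry helper: re-run an async call a few times before giving
// up, waiting longer after each failure (exponential backoff). In the game,
// this would wrap the per-round Gemini request.
async function withRetry<T>(
  call: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 500,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await call();
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts) {
        // Delays double each time: baseDelayMs, 2x, 4x, ...
        const delay = baseDelayMs * 2 ** (attempt - 1);
        await new Promise((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  // Every attempt failed: rethrow the last error so the caller can report it.
  throw lastError;
}

// Usage: a stand-in call that fails twice (like an overloaded service),
// then succeeds on the third attempt.
let calls = 0;
const flaky = async (): Promise<string> => {
  calls++;
  if (calls < 3) throw new Error("model overloaded");
  return "next round text";
};

withRetry(flaky, 3, 10).then((text) => console.log(`${text} after ${calls} calls`));
```

With a failure rate in the 10% to 20% range and independent failures, three attempts would turn most of those errors into a short delay instead of a dead game.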

So the LLM laid out a workable framework, but vibe coding alone wouldn’t have gotten it to a playable game. The lack of history in the prompts is another thing that needed human intelligence to fix. Adding history makes the number of tokens the LLM has to process grow with how long the game is played, which is perhaps why the original version left it out.
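A back-of-the-envelope sketch shows why. The per-round sizes below are made-up assumptions, not measurements from the game: 500 tokens of static instructions, 400 tokens of story plus choice per round, and a 50-token summary per round.

```typescript
// Back-of-the-envelope token accounting. All sizes are assumptions chosen
// for illustration, not measurements from the actual game.
const INSTRUCTION_TOKENS = 500; // static instructions sent every round
const ROUND_TOKENS = 400; // one round's story text plus the player's choice
const SUMMARY_TOKENS = 50; // a one-round summary

// Input tokens sent on round `round` (1-based), where `perPriorRound` is how
// many tokens of history each earlier round adds to the prompt.
function inputTokensForRound(round: number, perPriorRound: number): number {
  return INSTRUCTION_TOKENS + ROUND_TOKENS + (round - 1) * perPriorRound;
}

// Total input tokens over a game of `rounds` rounds.
function totalInputTokens(rounds: number, perPriorRound: number): number {
  let total = 0;
  for (let r = 1; r <= rounds; r++) {
    total += inputTokensForRound(r, perPriorRound);
  }
  return total;
}

const strategies: Array<[string, number]> = [
  ["no history", 0],
  ["full transcript", ROUND_TOKENS],
  ["summaries", SUMMARY_TOKENS],
];
for (const [name, perPriorRound] of strategies) {
  console.log(`${name}: ${totalInputTokens(20, perPriorRound)} input tokens over 20 rounds`);
}
```

Under these assumptions, a 20-round game sends 18,000 input tokens with no history, 94,000 with the full transcript, and 27,500 with summaries (not counting the summary calls themselves). The per-round prompt grows linearly with rounds played, so the total grows roughly quadratically.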

One other note: I tried the Gemini Flash Lite model, which worked but made everything too simplistic. The choice of model does matter, particularly when there is no fine-tuning and the prompts leave a lot of discretion to the model.

If you want to try it out for yourself

My adech-pm-simulator repo is on GitHub. You’ll need to create a Gemini API key and a .env.local file containing the following line (substituting your API key):

```
GEMINI_API_KEY=PLACEHOLDER_API_KEY
```